Difference between revisions of "Rule Sets FAQ"

(→How I create a regex pattern for Registered, Trademark, Copyright symbols?) |

|||

| (28 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

| + | ===Can I modify the Default Rule Sets?=== |

||

| + | |||

| + | If you are hosting the Globalyzer Server, the Administrator user can modify any of the Default Rule Sets. |

||

| + | |||

| + | If Lingoport is hosting the Globalyzer Server, you will not be able to modify the Default Rule Sets, but you can modify one of your own Rule Sets and then allow your team members to either use it (sharing) or copy it. This Rule Set can then be the starting point for their internationalization scanning and filtering process. |

||

| + | |||

| + | ===In what order are Rule Set detection and filtering applied?=== |

||

| + | There are four categories of results: Embedded Strings, Locale-Sensitive Methods, General Patterns, and Static File References. |

||

| + | |||

| + | For Embedded Strings, all strings are found as issues initially, then the string filters are run (all except String Method Filter) to see what should be filtered (String Line Filter, String Literal Filter, and String Operand Filter), and then the detection patterns are run to see what should be retained (String Method Pattern, String Operand Pattern, and String Retention Pattern). Then String Method Filter is run last. We used to run String Method Filters along with the other filters, but found in practice that String Method Filters should trump all detections, and be run last. |

||

| + | |||

| + | For the other three categories, the patterns are run first to find the issues, and then the filters are run to remove. |

||

| + | |||

| + | ===I changed the Rule Set but the Workbench keeps using the old Rule Set. How can I use the updated Rule Set?=== |

||

| + | When rule sets are modified on the server, select <b><code>Project->Reload Rule</code></b> Sets to refresh the client. |

||

| + | |||

| + | ===We have lots of Rule Sets: Is there a way to organize Rule Set to better manage them?=== |

||

| + | Globalyzer supports <b>Inherited Rule Sets</b>. |

||

| + | |||

| + | Base rule sets can be created and maintained by an individual and then project level rule sets can extend the base rule set. The extended rule set would have everything from the base rule set, plus whatever is added/modified. |

||

| + | |||

| + | When an individual introduces a new rule or modifies a rule in the project level rule set, other projects wouldn't be affected. |

||

| + | |||

| + | ===Where can I find help on “General Pattern” issues found in C++ code scanning?=== |

||

| + | |||

| + | If you login to the Globalyzer Server and look at the General Patterns for your rule set, it will often give information on why Globalyzer is scanning for this pattern. In addition, if you go to the Help system on the Globalyzer Server, there are various topics on C++ internationalization. In particular, click on '''Reference->Locale-Sensitive Methods->C++ Programming Language->C++ Rule Sets'''. This help page talks about Unicode support in the various C++ rule sets. For example, usually a C++ program will be compiled with single-byte character strings. These single-bytes cannot support Unicode characters, which require more than 1 byte. That is the main reason why our C++ General Patterns scan for character strings: You will have to make sure to modify them if they are to hold Unicode strings. |

||

| + | |||

===Does Globalyzer fix JavaScript locale-sensitive method issues?=== |

===Does Globalyzer fix JavaScript locale-sensitive method issues?=== |

||

| Line 13: | Line 40: | ||

===How do I add new JavaScript locale-sensitive methods or modify the description and help for existing methods?=== |

===How do I add new JavaScript locale-sensitive methods or modify the description and help for existing methods?=== |

||

| − | If you have a Globalyzer Team Server license, you can add to or modify the default |

+ | If you have a Globalyzer Team Server license, you can add to or modify the default Locale-Sensitive Methods for each programming language so that your users will also see your changes whenever they create a new Rule Set. If you’re using our hosted [http://www.globalyzer.com globalyzer.com] server, you can add to or modify the Locale-Sensitive Methods of a specific Rule Set that you create and then share with other Globalyzer users that are part of your team. That way, your team members will benefit from the work you have done in determining the resolution for Locale-Sensitive Method issues. This approach applies to all Rule Set rules, such as General Patterns, Static File References, and Embedded Strings. |

| + | |||

| + | ===When you create your rule set, can you specify the file extensions you would like scanned?=== |

||

| + | The default for a java rule set is to scan files with the following extensions: java, jsp, jspf, and jspx. If you are only interested in jsp files, you can disable the others. Steps to do this: |

||

| + | |||

| + | 1) Log in to the server and select your java rule set |

||

| + | |||

| + | 2) Select Configure Source File Extensions |

||

| + | |||

| + | 3) Uncheck the file extensions you are not interested in |

||

| + | |||

| + | |||

| + | You can also configure the scan to look at only certain directories in your project. Steps to do this: |

||

| + | |||

| + | 1) Log into the client. |

||

| + | |||

| + | 2) Select your project in Project Explorer and select Scan->Manage Scans |

||

| + | |||

| + | 3) Select the java scan and select Modify. |

||

| + | |||

| + | 4) Select the specific directories to scan, not just the entire project, and Finish |

||

| + | |||

| + | |||

| + | You can run your scan by selecting Scan->Single Scan. |

||

| + | |||

| + | ===How do I create a rule set for the 'C' language?=== |

||

| + | |||

| + | For the C language, you should choose one of our C++ variants. The main ones are '''ANSI UTF-8''', '''ANSI UTF-16''', '''Cross Platform UTF-8''', '''Cross Platform UTF-16''', '''Windows Generic''', '''Windows MBCS''', and '''Windows Unicode'''. If you are using GNU C, you will want to use one of the ANSI rule sets. UTF-8 if that’s how you want to support Unicode; UTF-16 if you will be using wide-character calls to support UTF-16 Unicode. If you are just running on Windows, then you can choose a Windows variant. If you’ll be running on both, then you’ll need a cross-platform rule set. The difference between the variants is the list of locale-sensitive methods Globalyzer will scan for in your code. To get a better feel, you can create a few rule set with the different variants and look at the locale-sensitive methods defined. |

||

| + | |||

| + | ===How to define a regex pattern in a rule set=== |

||

| + | |||

| + | When specifying regex patterns with UTF-8 characters, you need to specify the characters like this: '''\uXXXX''' where XXXX is the hexidecimal number for the character. |

||

| + | |||

| + | For example, if I have this string: '''"中国"''' |

||

| + | Then I would specify this general pattern to find it: '''\u4E2D\u56FD''' |

||

| + | |||

| + | http://www.regular-expressions.info/unicode.html |

||

| + | |||

| + | ===How do I create a regex pattern for Registered, Trademark, Copyright symbols?=== |

||

| + | |||

| + | To detect/filter characters such as ® (Registered), please use the Unicode code point in the regex. For instance, |

||

| + | * ® (Registered): \u00AE |

||

| + | * ™ (Trademark): \u2122 |

||

| + | * © (Copyright): \u00A9 |

||

| + | |||

| + | ===Searching for characters in a specified language=== |

||

| + | |||

| + | It may be useful to detect all strings that contain characters from a specific language. For instance, finding all strings of Chinese characters within an application. This can be done using Unicode scripts. Here are a few examples: |

||

| + | |||

| + | Chinese: \p{script=Han} |

||

| + | |||

| + | Korean: \p{script=Hangul} |

||

| + | |||

| + | |||

| + | Some languages, such as Japanese, combine multiple scripts. |

||

| + | |||

| + | Japanese: [\p{script=Hiragana}\p{script=Katakana}\p{script=Han}] |

||

| + | |||

| + | ===Searching for characters in a specified language with character ranges=== |

||

| + | |||

| + | Unicode scripts are not the only way to find Characters within a specified language. While they are the simplest means to do this, they are not supported in all environments. For instance, Java 1.6 does not support regex searches using Unicode scripts. |

||

| + | |||

| + | Another solution is to use Unicode character ranges. For instance, CJK Unified Ideographs represent the most common Chinese characters. The basic set of CJK Unified Ideographs are all contained within the character range \u4e00-\u9fd5. To search for strings containing these characters, create a String Retention Pattern with the following regex pattern: |

||

| + | |||

| + | [\u4e00-\u9fd5]+ |

||

| + | |||

| + | Additional Chinese Ideograph characters fall within the ranges of: |

||

| + | |||

| + | * \u3400-\u4db5 (CJK Unifed Ideographs extension a) |

||

| + | * \u20000-\u2a6d6 (CJK Unifed Ideographs extension b) |

||

| + | * \u2a700-\u2b734 (CJK Unifed Ideographs extension c) |

||

| + | |||

| + | Multiple character ranges can be used to create a single expanded character set, like so: |

||

| + | |||

| + | [\u4e00-\u9fd5\u3400-\u4db5\u20000-\u2a6d6\u2a700-\u2b734]+ |

||

| + | |||

| + | The above regex expression will find strings of one or more Chinese characters from any of the Ideograph sets. |

||

| + | |||

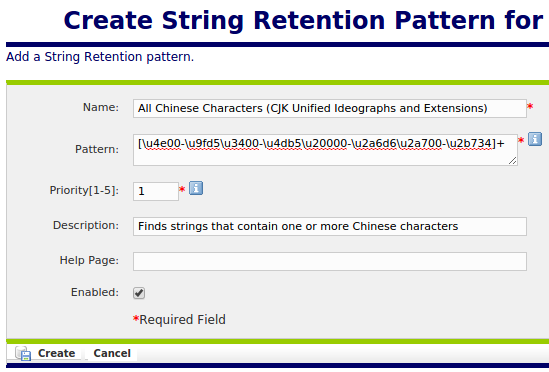

| + | For Example: |

||

| + | [[File:ChineseStringRetentionPatternAllChineseCharacters.png]] |

||

| + | |||

| + | ===Character Ranges for Korean, Chinese and Japanese=== |

||

| + | ====Korean (Hangul)==== |

||

| + | * (Hex 1100-11ff) (Decimal 4352-4607) [http://www.unicode.org/charts/PDF/U1100.pdf (Jamo)] |

||

| + | * (Hex a960-a97c) (Decimal 43360-43388) [http://www.unicode.org/charts/PDF/UA960.pdf (Jamo extended a)] |

||

| + | * (Hex d7b0-d7ff) (Decimal 55216-55295) [http://www.unicode.org/charts/PDF/UD7B0.pdf (Jamo extended b)] |

||

| + | * (Hex 3130-318f) (Decimal 12592-12687) [http://www.unicode.org/charts/PDF/U3130.pdf (Hangul compatibility Jamo)] |

||

| + | * (Hex ff00-ffef) (Decimal 65280-65519) [http://www.unicode.org/charts/PDF/UFF00.pdf (half width and full width forms, includes english alphabet)] |

||

| + | |||

| + | ====Chinese (Han)==== |

||

| + | * (Hex 4e00-9fd5) (Decimal 19968-40917) [http://www.unicode.org/charts/PDF/U4E00.pdf (CJK Unified Ideographs, ~500 page pdf)] |

||

| + | * (Hex 3400-4db5) (Decimal 13312-19893) [http://www.unicode.org/charts/PDF/U3400.pdf (CJK Unified Ideographs ext. a, ~100 page pdf)] |

||

| + | * (Hex 20000-2a6d6) (Decimal 131072-173782) [http://www.unicode.org/charts/PDF/U20000.pdf (CJK Unified Ideographs ext. b, ~400 page pdf)] |

||

| + | * (Hex 2a700-2b734) (Decimal 173824-177972) [http://www.unicode.org/charts/PDF/U2A700.pdf (CJK Unified Ideographs ext. c, ~40 page pdf)] |

||

| + | |||

| + | ====Japanese (Katakana, Hiragana, Kanji)==== |

||

| + | =====At a glance:===== |

||

| + | * (Hex 3000-30ff) (Decimal 12288-12543) ([http://www.unicode.org/charts/PDF/U3000.pdf Punctuation],[http://www.unicode.org/charts/PDF/U3040.pdf Hiragana],[http://www.unicode.org/charts/PDF/U30A0.pdf Katakana]) |

||

| + | * (Hex 31f0-31ff) (Decimal 12784-12799) [http://www.unicode.org/charts/PDF/U31F0.pdf (Katakana phonetic extensions)] |

||

| + | * (Hex 1b000-1b0ff) (Decimal 110592-110847) [http://www.unicode.org/charts/PDF/U1B000.pdf (Katakana supplement)] |

||

| + | * (Hex 3400-9faf) (Decimal 13312-40879) ([http://www.rikai.com/library/kanjitables/kanji_codes.unicode.shtml Common / Uncommon Kanji] and [http://www.rikai.com/library/kanjitables/kanji_codes.unicode.shtml Rare Kanji]) |

||

| + | ** Exclude 4db1-4dff if you wish to avoid a section between sets of Kanji |

||

| + | * (Hex ff00-ffef) (Decimal 65280-65519) [http://www.unicode.org/charts/PDF/UFF00.pdf (Half width and full width forms, includes english alphabet)] |

||

| + | |||

| + | =====Full details:===== |

||

| + | * (Hex 3000-303f) (Decimal 12288-12351) [http://www.unicode.org/charts/PDF/U3000.pdf (Punctuation)] |

||

| + | * (Hex 3040-309f) (Decimal 12352-12447) [http://www.unicode.org/charts/PDF/U3040.pdf (Hiragana)] |

||

| + | * (Hex 30a0-30ff) (Decimal 12448-12543) [http://www.unicode.org/charts/PDF/U30A0.pdf (Katakana)] |

||

| + | * (Hex 31f0-31ff) (Decimal 12784-12799) [http://www.unicode.org/charts/PDF/U31F0.pdf (Katakana phonetic extensions)] |

||

| + | |||

| + | * (Hex 1b000-1b0ff) (Decimal 110592-110847) [http://www.unicode.org/charts/PDF/U1B000.pdf (Katakana supplement)] |

||

| + | |||

| + | * (Hex 4e00-9faf) (Decimal 19968-40879) [http://www.rikai.com/library/kanjitables/kanji_codes.unicode.shtml (Common and Uncommon Kanji)] |

||

| + | * (Hex 3400-4dbf) (Decimal 13312-19903) [http://www.rikai.com/library/kanjitables/kanji_codes.unicode.shtml (Rare Kanji)] |

||

| + | |||

| + | * (Hex ff60-ffdf) (Decimal 65376-65503) [http://www.unicode.org/charts/PDF/UFF00.pdf (Half width Japanese punctuation and Katakana)] |

||

| + | * (Hex ff00-ffef) (Decimal 65280-65519) [http://www.unicode.org/charts/PDF/UFF00.pdf (Half width and full width forms, includes english alphabet)] |

||

Latest revision as of 17:40, 13 March 2018

Contents

- 1 Can I modify the Default Rule Sets?

- 2 In what order are Rule Set detection and filtering applied?

- 3 I changed the Rule Set but the Workbench keeps using the old Rule Set. How can I use the updated Rule Set?

- 4 We have lots of Rule Sets: Is there a way to organize Rule Sets to better manage them?

- 5 Where can I find help on “General Pattern” issues found in C++ code scanning?

- 6 Does Globalyzer fix JavaScript locale-sensitive method issues?

- 7 What is the fix for the JavaScript locale-sensitive method charAt()?

- 8 When will internationalization help be added for JavaScript locale-sensitive methods?

- 9 How do I add new JavaScript locale-sensitive methods or modify the description and help for existing methods?

- 10 When you create your rule set, can you specify the file extensions you would like scanned?

- 11 How do I create a rule set for the 'C' language?

- 12 How to define a regex pattern in a rule set

- 13 How do I create a regex pattern for Registered, Trademark, Copyright symbols?

- 14 Searching for characters in a specified language

- 15 Searching for characters in a specified language with character ranges

- 16 Character Ranges for Korean, Chinese and Japanese

Can I modify the Default Rule Sets?

If you are hosting the Globalyzer Server, the Administrator user can modify any of the Default Rule Sets.

If Lingoport is hosting the Globalyzer Server, you will not be able to modify the Default Rule Sets, but you can modify one of your own Rule Sets and then allow your team members to either use it (sharing) or copy it. This Rule Set can then be the starting point for their internationalization scanning and filtering process.

In what order are Rule Set detection and filtering applied?

There are four categories of results: Embedded Strings, Locale-Sensitive Methods, General Patterns, and Static File References.

For Embedded Strings, every string is initially reported as an issue. The string filters, except the String Method Filter, are then run to decide what should be filtered out (String Line Filter, String Literal Filter, and String Operand Filter). Next, the detection patterns are run to decide what should be retained (String Method Pattern, String Operand Pattern, and String Retention Pattern). Finally, the String Method Filter is run. We used to run String Method Filters along with the other filters, but found in practice that String Method Filters should override all detections and therefore run last.

For the other three categories, the patterns are run first to find the issues, and then the filters are run to remove the issues that should be filtered out.
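The ordering for Embedded Strings can be sketched in Java as follows. This is only an illustration of the order described above, not Globalyzer code: the class name, the method name, and the three predicates are hypothetical stand-ins, and real filters look at the surrounding line and method call rather than just the string text.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical sketch of the Embedded Strings ordering; not Globalyzer code. */
final class EmbeddedStringOrder {

    /**
     * Returns the strings that are still reported as issues.
     * earlyFilters stands in for the String Line, Literal, and Operand Filters.
     * retention stands in for the String Method, Operand, and Retention Patterns.
     * methodFilter stands in for the String Method Filter, which runs last.
     */
    static List<String> remainingIssues(List<String> strings,
                                        Predicate<String> earlyFilters,
                                        Predicate<String> retention,
                                        Predicate<String> methodFilter) {
        List<String> issues = new ArrayList<>();
        for (String s : strings) {
            boolean reported = true;                      // 1. every string starts as an issue
            if (earlyFilters.test(s)) reported = false;   // 2. early filters remove it
            if (retention.test(s)) reported = true;       // 3. retention patterns bring it back
            if (methodFilter.test(s)) reported = false;   // 4. the method filter overrides everything
            if (reported) issues.add(s);
        }
        return issues;
    }

    public static void main(String[] args) {
        List<String> found = Arrays.asList("id", "Hi", "Hello log", "Save file");
        List<String> issues = remainingIssues(
                found,
                s -> s.length() <= 2,       // assumed early filter: very short strings
                s -> s.startsWith("H"),     // assumed retention pattern
                s -> s.contains("log"));    // assumed method filter
        System.out.println(issues);         // [Hi, Save file]
    }
}
```

In this sketch "Hi" is filtered early but retained by a pattern, while "Hello log" is retained by a pattern and then removed by the final method filter.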

I changed the Rule Set but the Workbench keeps using the old Rule Set. How can I use the updated Rule Set?

When rule sets are modified on the server, select Project->Reload Rule Sets to refresh the client.

We have lots of Rule Sets: Is there a way to organize Rule Sets to better manage them?

Globalyzer supports Inherited Rule Sets.

Base rule sets can be created and maintained by an individual, and project-level rule sets can then extend the base rule set. The extended rule set has everything from the base rule set, plus whatever is added or modified.

When an individual introduces a new rule or modifies a rule in a project-level rule set, other projects are not affected.

Where can I find help on “General Pattern” issues found in C++ code scanning?

If you log in to the Globalyzer Server and look at the General Patterns for your rule set, each pattern will often include information on why Globalyzer is scanning for it. In addition, the Help system on the Globalyzer Server has various topics on C++ internationalization. In particular, click on Reference->Locale-Sensitive Methods->C++ Programming Language->C++ Rule Sets. This help page discusses Unicode support in the various C++ rule sets. For example, a C++ program is usually compiled with single-byte character strings. A single byte cannot represent most Unicode characters, which require more than one byte. That is the main reason why our C++ General Patterns scan for character strings: you will have to modify them if they are to hold Unicode strings.

Does Globalyzer fix JavaScript locale-sensitive method issues?

Globalyzer detects methods that could be an issue when supporting multiple languages, but has no specific fixing built in. This is because it’s not always clear that the method is an actual issue and the fix may involve some reworking that requires manual decisions. However, for some programming languages, we have written internationalization (i18n) help for the method that explains the reason for the detection as well as suggestions on what change might need to be made. When we don’t provide specific i18n help, we provide links to external help on the method, which sometimes provide information about i18n considerations.

What is the fix for the JavaScript locale-sensitive method charAt()?

In this case, Globalyzer detected charAt because it is a method that indexes into a string. If that string contains a translation, then the location of the character may have changed or it may not be the same character. The fix is really dependent on the usage. If the string is locale-independent, then you can insert an Ignore This Line comment so that Globalyzer will no longer flag this issue.

When will internationalization help be added for JavaScript locale-sensitive methods?

We are always pressed to get more features into Globalyzer, but do try to spend as much time as possible adding to the help. In the meantime, if you have any specific questions, you should email support@lingoport.com and we’ll get an answer for you right away!

How do I add new JavaScript locale-sensitive methods or modify the description and help for existing methods?

If you have a Globalyzer Team Server license, you can add to or modify the default Locale-Sensitive Methods for each programming language so that your users will also see your changes whenever they create a new Rule Set. If you’re using our hosted globalyzer.com server, you can add to or modify the Locale-Sensitive Methods of a specific Rule Set that you create and then share with other Globalyzer users that are part of your team. That way, your team members will benefit from the work you have done in determining the resolution for Locale-Sensitive Method issues. This approach applies to all Rule Set rules, such as General Patterns, Static File References, and Embedded Strings.

When you create your rule set, can you specify the file extensions you would like scanned?

The default for a Java rule set is to scan files with the following extensions: java, jsp, jspf, and jspx. If you are only interested in jsp files, you can disable the others. Steps to do this:

1) Log in to the server and select your Java rule set.

2) Select Configure Source File Extensions.

3) Uncheck the file extensions you are not interested in.

You can also configure the scan to look at only certain directories in your project. Steps to do this:

1) Log in to the client.

2) Select your project in Project Explorer and select Scan->Manage Scans.

3) Select the Java scan and select Modify.

4) Select the specific directories to scan, rather than the entire project, and select Finish.

You can run your scan by selecting Scan->Single Scan.

How do I create a rule set for the 'C' language?

For the C language, you should choose one of our C++ variants. The main ones are ANSI UTF-8, ANSI UTF-16, Cross Platform UTF-8, Cross Platform UTF-16, Windows Generic, Windows MBCS, and Windows Unicode. If you are using GNU C, you will want to use one of the ANSI rule sets: UTF-8 if that’s how you want to support Unicode, or UTF-16 if you will be using wide-character calls to support UTF-16 Unicode. If you are running only on Windows, you can choose a Windows variant. If you’ll be running on both Windows and other platforms, you’ll need a cross-platform rule set. The difference between the variants is the list of locale-sensitive methods Globalyzer will scan for in your code. To get a better feel, you can create a few rule sets with the different variants and look at the locale-sensitive methods defined.

How to define a regex pattern in a rule set

When specifying regex patterns that contain non-ASCII characters, specify each character as \uXXXX, where XXXX is the four-digit hexadecimal Unicode code point of the character.

For example, to find the string "中国", specify this General Pattern: \u4E2D\u56FD

For more information, see http://www.regular-expressions.info/unicode.html
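As a rough way to try such a pattern outside the product, the same escapes can be compiled with Java's standard regex engine. This is a minimal sketch that assumes the rule set pattern behaves like a java.util.regex pattern; the class and method names are made up for the example.

```java
import java.util.regex.Pattern;

/** Illustrative only: checks the escaped pattern against sample source lines. */
final class UnicodeEscapeDemo {

    // Regex text: the escapes for U+4E2D and U+56FD. The backslashes are
    // doubled so that the regex engine, not the Java compiler, reads them.
    static final Pattern ZHONGGUO = Pattern.compile("\\u4E2D\\u56FD");

    static boolean containsZhongguo(String text) {
        return ZHONGGUO.matcher(text).find();
    }

    public static void main(String[] args) {
        System.out.println(containsZhongguo("label = \"\u4E2D\u56FD\";")); // true
        System.out.println(containsZhongguo("label = \"China\";"));        // false
    }
}
```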

How do I create a regex pattern for Registered, Trademark, Copyright symbols?

To detect or filter characters such as ® (Registered), use the Unicode code point in the regex. For instance:

- ® (Registered): \u00AE

- ™ (Trademark): \u2122

- © (Copyright): \u00A9
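The three code points above can also be combined into one character class. The following sketch assumes Java regex semantics, and the class and method names are hypothetical.

```java
import java.util.regex.Pattern;

/** Illustrative only: one character class covering the three symbols. */
final class LegalSymbolDemo {

    // Registered (U+00AE), Trademark (U+2122), Copyright (U+00A9).
    static final Pattern LEGAL_SYMBOL = Pattern.compile("[\\u00AE\\u2122\\u00A9]");

    static boolean hasLegalSymbol(String text) {
        return LEGAL_SYMBOL.matcher(text).find();
    }

    public static void main(String[] args) {
        System.out.println(hasLegalSymbol("Acme\u2122 Widgets")); // true
        System.out.println(hasLegalSymbol("Acme (TM) Widgets"));  // false
    }
}
```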

Searching for characters in a specified language

It may be useful to detect all strings that contain characters from a specific language, for instance, to find all strings of Chinese characters within an application. This can be done using Unicode scripts. Here are a few examples:

Chinese: \p{script=Han}

Korean: \p{script=Hangul}

Some languages, such as Japanese, combine multiple scripts.

Japanese: [\p{script=Hiragana}\p{script=Katakana}\p{script=Han}]
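The script patterns above can be tried with Java's regex engine, which accepts the script= syntax from Java 7 onward. This is a sketch under that assumption; the class name and the trailing + quantifiers are additions for the example.

```java
import java.util.regex.Pattern;

/** Illustrative only: the script-based patterns compiled with java.util.regex. */
final class ScriptDemo {

    static final Pattern HAN = Pattern.compile("\\p{script=Han}+");
    static final Pattern HANGUL = Pattern.compile("\\p{script=Hangul}+");
    static final Pattern JAPANESE = Pattern.compile(
            "[\\p{script=Hiragana}\\p{script=Katakana}\\p{script=Han}]+");

    public static void main(String[] args) {
        String chinese = "\u4E2D\u56FD";              // two Han ideographs
        String korean = "\uD55C\uAE00";               // two Hangul syllables
        String hiragana = "\u3072\u3089\u304C\u306A"; // four Hiragana letters

        System.out.println(HAN.matcher(chinese).matches());       // true
        System.out.println(HANGUL.matcher(korean).matches());     // true
        System.out.println(JAPANESE.matcher(hiragana).matches()); // true
        System.out.println(HAN.matcher("abc").find());            // false
    }
}
```

Because the Japanese pattern includes the Han script, it also matches text written only in Chinese characters.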

Searching for characters in a specified language with character ranges

Unicode scripts are not the only way to find characters within a specified language. While they are the simplest means to do this, they are not supported in all environments. For instance, Java 1.6 does not support regex searches using Unicode scripts.

Another solution is to use Unicode character ranges. For instance, CJK Unified Ideographs represent the most common Chinese characters. The basic set of CJK Unified Ideographs is contained within the character range \u4e00-\u9fd5. To search for strings containing these characters, create a String Retention Pattern with the following regex pattern:

[\u4e00-\u9fd5]+

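Additional Chinese ideographs fall within the following ranges:

- \u3400-\u4db5 (CJK Unified Ideographs extension A)

- \x{20000}-\x{2a6d6} (CJK Unified Ideographs extension B)

- \x{2a700}-\x{2b734} (CJK Unified Ideographs extension C)

The \uXXXX form takes exactly four hexadecimal digits, so code points above \uffff are written here in the \x{...} form. This assumes the pattern is evaluated by a Java regex engine, which accepts that form from Java 7 onward.

Multiple character ranges can be used to create a single expanded character set, like so:

[\u4e00-\u9fd5\u3400-\u4db5\x{20000}-\x{2a6d6}\x{2a700}-\x{2b734}]+

The regular expression above will find strings of one or more Chinese characters from any of the ideograph sets.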
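One detail is worth checking when a range goes beyond four hexadecimal digits. In Java's regex engine, \u reads exactly four digits, so a five-digit value is split into a four-digit escape plus a literal digit, and the resulting class matches far more than intended. The sketch below assumes Java 7 or later and uses made-up class and field names to compare the two spellings of the extension B range.

```java
import java.util.regex.Pattern;

/** Illustrative only: five-digit code points in a Java regex character class. */
final class SupplementaryRangeDemo {

    // Read by the engine as U+2000, then the range '0'..U+2A6D, then '6'.
    static final Pattern FIVE_DIGIT_U = Pattern.compile("[\\u20000-\\u2a6d6]+");

    // Braced hex notation: the intended range U+20000..U+2A6D6.
    static final Pattern BRACED = Pattern.compile("[\\x{20000}-\\x{2a6d6}]+");

    // All four ideograph ranges, with the supplementary ones in braced form.
    static final Pattern ALL_HAN_RANGES = Pattern.compile(
            "[\\u4e00-\\u9fd5\\u3400-\\u4db5\\x{20000}-\\x{2a6d6}\\x{2a700}-\\x{2b734}]+");

    static boolean matchesWhole(Pattern p, String s) {
        return p.matcher(s).matches();
    }

    public static void main(String[] args) {
        String extB = new String(Character.toChars(0x20000)); // first extension B ideograph

        System.out.println(matchesWhole(FIVE_DIGIT_U, "A")); // true: ASCII letters slip in
        System.out.println(matchesWhole(BRACED, "A"));       // false
        System.out.println(matchesWhole(BRACED, extB));      // true
        System.out.println(matchesWhole(ALL_HAN_RANGES, "\u4E2D\u56FD")); // true
    }
}
```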

Character Ranges for Korean, Chinese and Japanese

Korean (Hangul)

- (Hex 1100-11ff) (Decimal 4352-4607) (Jamo)

- (Hex a960-a97c) (Decimal 43360-43388) (Jamo extended a)

- (Hex d7b0-d7ff) (Decimal 55216-55295) (Jamo extended b)

- (Hex 3130-318f) (Decimal 12592-12687) (Hangul compatibility Jamo)

- (Hex ac00-d7af) (Decimal 44032-55215) (Hangul syllables)

- (Hex ff00-ffef) (Decimal 65280-65519) (half width and full width forms, includes English alphabet)

Chinese (Han)

- (Hex 4e00-9fd5) (Decimal 19968-40917) (CJK Unified Ideographs, ~500 page pdf)

- (Hex 3400-4db5) (Decimal 13312-19893) (CJK Unified Ideographs ext. a, ~100 page pdf)

- (Hex 20000-2a6d6) (Decimal 131072-173782) (CJK Unified Ideographs ext. b, ~400 page pdf)

- (Hex 2a700-2b734) (Decimal 173824-177972) (CJK Unified Ideographs ext. c, ~40 page pdf)

Japanese (Katakana, Hiragana, Kanji)

At a glance:

- (Hex 3000-30ff) (Decimal 12288-12543) (Punctuation, Hiragana, Katakana)

- (Hex 31f0-31ff) (Decimal 12784-12799) (Katakana phonetic extensions)

- (Hex 1b000-1b0ff) (Decimal 110592-110847) (Katakana supplement)

- (Hex 3400-9faf) (Decimal 13312-40879) (Common / Uncommon Kanji and Rare Kanji)

  - Exclude 4db1-4dff if you wish to avoid a section between sets of Kanji

- (Hex ff00-ffef) (Decimal 65280-65519) (Half width and full width forms, includes English alphabet)

Full details:

- (Hex 3000-303f) (Decimal 12288-12351) (Punctuation)

- (Hex 3040-309f) (Decimal 12352-12447) (Hiragana)

- (Hex 30a0-30ff) (Decimal 12448-12543) (Katakana)

- (Hex 31f0-31ff) (Decimal 12784-12799) (Katakana phonetic extensions)

- (Hex 1b000-1b0ff) (Decimal 110592-110847) (Katakana supplement)

- (Hex 4e00-9faf) (Decimal 19968-40879) (Common and Uncommon Kanji)

- (Hex 3400-4dbf) (Decimal 13312-19903) (Rare Kanji)

- (Hex ff60-ffdf) (Decimal 65376-65503) (Half width Japanese punctuation and Katakana)

- (Hex ff00-ffef) (Decimal 65280-65519) (Half width and full width forms, includes English alphabet)
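The hex ranges listed above can be turned into a regex character class mechanically. The helper below is a hypothetical sketch, not part of Globalyzer: it assumes a Java 7 or later regex engine and writes every bound in braced hex form so that five-digit code points are handled the same way as four-digit ones. The sample uses the Japanese "Full details" ranges, leaving out the broad ff00-ffef range because it also covers the full-width English alphabet.

```java
import java.util.regex.Pattern;

/** Illustrative only: builds a character class from hex code point ranges. */
final class RangeClassBuilder {

    /** Each row is {first code point, last code point}, both inclusive. */
    static String toCharClass(int[][] ranges) {
        StringBuilder sb = new StringBuilder("[");
        for (int[] r : ranges) {
            // Braced hex notation works for any code point, not only four-digit ones.
            sb.append(String.format("\\x{%x}-\\x{%x}", r[0], r[1]));
        }
        return sb.append("]+").toString();
    }

    // Japanese "Full details" ranges from the list above.
    static final int[][] JAPANESE = {
        {0x3000, 0x303f}, {0x3040, 0x309f}, {0x30a0, 0x30ff}, {0x31f0, 0x31ff},
        {0x1b000, 0x1b0ff}, {0x4e00, 0x9faf}, {0x3400, 0x4dbf}, {0xff60, 0xffdf}
    };

    public static void main(String[] args) {
        Pattern japanese = Pattern.compile(toCharClass(JAPANESE));
        String greeting = "\u3053\u3093\u306B\u3061\u306F"; // five Hiragana letters

        System.out.println(japanese.matcher(greeting).matches()); // true
        System.out.println(japanese.matcher("hello").find());     // false
    }
}
```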